Getting Started With Hadoop & Big Data in Cookiepedia

02 Oct 2014

Background – What Is Hadoop?

Like Big Data itself, Hadoop has attracted an awful lot of hype and acres of not particularly accessible content. One fun fact: the name Hadoop came from a yellow toy elephant owned by the son of one of its inventors.

So far, so cute, but what does it do and where did it come from? Here goes: Hadoop is an open-source software framework for storing and processing big data in a distributed fashion on large clusters of commodity hardware.

Essentially, it accomplishes two tasks: massive data storage and faster processing.

Hadoop came into being around the time Google was working through the problem of regularly indexing the entire web using automated, distributed data storage and processing, the approach we now associate with ‘The Cloud’.

Yahoo hired Doug Cutting, one of Hadoop's creators, in 2006, and in 2008 Hadoop became a top-level open-source project at the Apache Software Foundation (ASF). Today its framework and technologies are managed and maintained by the non-profit ASF, a global community of software developers and contributors.

As software developers ourselves and trainee ‘Data Scientists’, we decided to use our own Big (ish) Data to road-test Hadoop and see if it could help us. This is what we discovered.

Cookiepedia – Big Data & Our Problem

Since 2011 we have collected data about the use of cookies on public websites. This is anonymous data comprising the names and properties of the cookies that websites write to client machines during browsing. Our records include changes to each site's cookie footprint over time. More recently we have started to collect data about tags, local storage and forms on websites.

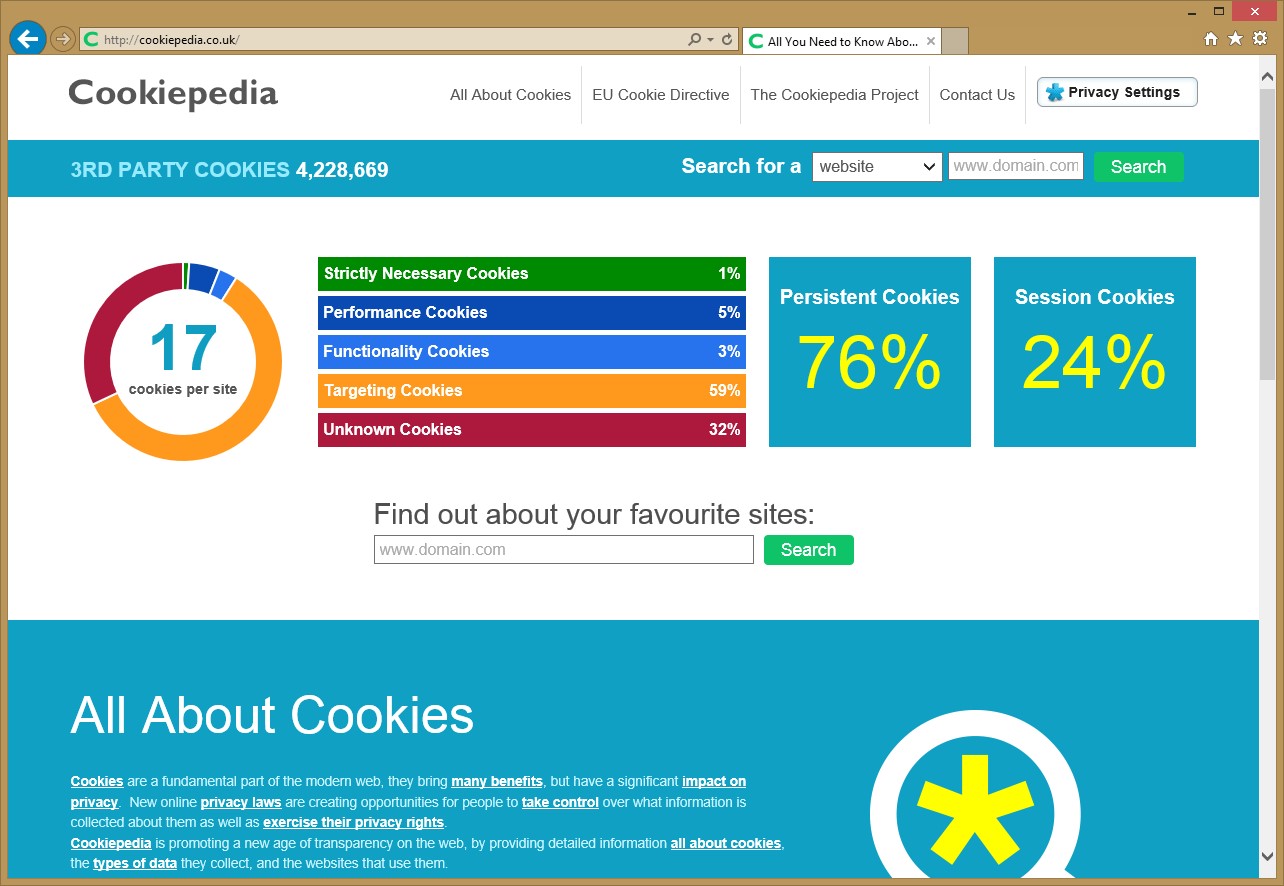

www.Cookiepedia.co.uk is our website which surfaces this data as a ‘dashboard’ for the general public interest. It allows you to search by website address, host domain or cookie name and discover information about cookies - their purpose and which websites they appear on.

Here’s what it looks like:

Cookiepedia works on aggregated statistical data derived from the raw cookie collection logs. The aggregated data is currently built by an incremental process that reads new cookie records from the collection database and writes them into an index database.

The problem with this approach comes when we want to add a new metric or change the way the data is collected. In that case we need to do a full re-index of all the data, and because the process is based on standard SQL Server technology, the re-index is slow. It can take a week to re-aggregate all the domains in the database (currently 11 million and counting).
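To make the trade-off concrete, here is a minimal Python sketch of the two modes. It is an illustration only: the row layout, the `RAW_LOG` sample, and the function names are hypothetical and are not taken from the Cookiepedia databases. The incremental path only looks at rows newer than a watermark, whereas a new metric (here, distinct cookie names per domain) cannot be derived from the existing counts and has to be rebuilt from every raw row.

```python
from collections import defaultdict

# Hypothetical raw log rows: (record_id, domain, cookie_name).
RAW_LOG = [
    (1, "example.com", "session_id"),
    (2, "example.com", "_ga"),
    (3, "shop.test", "_ga"),
    (4, "example.com", "_ga"),
]


def apply_incremental(index, last_seen_id, rows):
    """Fold only rows newer than last_seen_id into a sightings-per-domain index."""
    new_rows = [row for row in rows if row[0] > last_seen_id]
    for _record_id, domain, _cookie in new_rows:
        index[domain] += 1
    # The new watermark is the highest record id processed so far.
    return max((row[0] for row in new_rows), default=last_seen_id)


def full_reindex_distinct(rows):
    """Rebuild a new metric from scratch: distinct cookie names per domain."""
    seen = defaultdict(set)
    for _record_id, domain, cookie in rows:
        seen[domain].add(cookie)
    return {domain: len(cookies) for domain, cookies in seen.items()}


index = defaultdict(int)
watermark = apply_incremental(index, 0, RAW_LOG[:2])    # first batch
watermark = apply_incremental(index, watermark, RAW_LOG)  # only rows 3 and 4 are new
distinct = full_reindex_distinct(RAW_LOG)                 # must scan everything
```

The incremental step is cheap because it touches a handful of rows, but `full_reindex_distinct` scales with the whole log, which is where a week-long re-index comes from.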

This is a classic example of a problem that could be solved by 'big data' techniques, most notably tools that process the data in parallel to speed up results generation and that scale out across larger clusters of machines. We therefore started to look at the most popular tool in this area, Hadoop, as a potential replacement for the current aggregation process: one that would be faster and more scalable.
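The idea of processing data in parallel can be sketched in a few lines of Python. This is not Hadoop code: it is a toy map/reduce over in-memory rows, with a thread pool standing in for the nodes of a cluster. The function names and the `(domain, cookie_name)` row shape are assumptions made for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_partition(rows):
    """Map step: count cookie sightings per domain within one partition."""
    return Counter(domain for domain, _cookie in rows)


def reduce_counts(partials):
    """Reduce step: merge the per-partition counts into one result."""
    total = Counter()
    for partial in partials:
        total.update(partial)  # Counter.update adds counts together
    return total


def aggregate_in_parallel(rows, partitions=4):
    """Split rows into partitions, count each independently, then merge."""
    chunks = [rows[i::partitions] for i in range(partitions)]
    # Threads stand in for cluster nodes; each chunk is processed on its own.
    with ThreadPoolExecutor(max_workers=partitions) as pool:
        partials = list(pool.map(map_partition, chunks))
    return reduce_counts(partials)


rows = [("a.com", "x"), ("b.com", "y"), ("a.com", "z")] * 10
totals = aggregate_in_parallel(rows)
```

Because each partition is counted without reference to the others, adding more workers (or, on a real cluster, more nodes) shortens the map step without changing the result.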

What We Did & the Results of Our Hadoop Experiment

Rather than set up the infrastructure to run Hadoop ourselves, we took advantage of Microsoft's new cloud Hadoop offering, HDInsight. This is essentially Hadoop plus a set of related tools, hosted in the cloud. Its advantage is that you pay for clusters by the hour and can provision them and tear them down easily.

Normally, Hadoop stores data in its own file system (HDFS), but HDInsight allows you to keep your data in cloud storage so that it persists beyond the lifetime of the cluster you use to process it. This means we can script a scheduled task that creates the Hadoop cluster, runs our aggregation jobs and shuts the cluster down again. We can therefore control the cost by controlling the schedule and the size of the cluster.
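The cost argument is simple arithmetic, sketched below in Python. Every number here is hypothetical (the node count, the hourly rate and the schedule are invented for illustration, not real HDInsight prices), and the sketch assumes billing is purely per node-hour, ignoring storage charges and any minimum billing increment.

```python
def monthly_cluster_cost(nodes, hourly_rate_per_node, hours_per_run, runs_per_month):
    """Estimate monthly spend for a cluster that is billed per node-hour."""
    return nodes * hourly_rate_per_node * hours_per_run * runs_per_month


# Hypothetical figures: 8 nodes at 0.50 per node-hour.
on_demand = monthly_cluster_cost(8, 0.50, hours_per_run=1, runs_per_month=30)
always_on = monthly_cluster_cost(8, 0.50, hours_per_run=24, runs_per_month=30)
```

Under these assumptions, a cluster that exists for one hour a day costs a twenty-fourth of one that is left running, and both the schedule and the node count are levers you can adjust.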

To create a useful data aggregation job, we needed to complete the following steps:

1. Automate the provisioning of a Hadoop cluster

2. Import the data into the cluster from SQL Server in a format we can work with

3. Run the data aggregation processes on the data as fast as possible

4. Export the aggregated index data back to SQL Server

5. Shut down the cluster (as we pay by the hour)
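The five steps above can be sketched as a small Python driver. The step functions are passed in as parameters and are entirely hypothetical placeholders; they do not correspond to real HDInsight commands. The point of the sketch is the `try`/`finally`: because the cluster is billed by the hour, the shutdown step should run even if an earlier step fails.

```python
def run_aggregation_pipeline(provision, import_data, aggregate, export_data, shut_down):
    """Run the five steps in order; always shut the cluster down once it exists."""
    cluster = provision()            # step 1
    try:
        import_data(cluster)         # step 2
        aggregate(cluster)           # step 3
        export_data(cluster)         # step 4
    finally:
        shut_down(cluster)           # step 5, runs even if a step above raised


# Stand-in steps that only record the order in which they were called.
calls = []


def make_step(name, fail=False):
    def step(cluster=None):
        calls.append(name)
        if fail:
            raise RuntimeError(f"{name} failed")
        return "demo-cluster"
    return step


run_aggregation_pipeline(
    make_step("provision"),
    make_step("import"),
    make_step("aggregate"),
    make_step("export"),
    make_step("shut_down"),
)
```

If the aggregation step raises, the export is skipped but the cluster is still torn down, so a failed run does not leave a paid-for cluster idling.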

HDInsight comes with an extensive set of scripting commands that let you automate all of its functions. Using those, we were able to script a job that provisions a new HDInsight cluster with a given number of nodes and properties.

The result is that we were able to reduce the full re-process time from over a week to just 30 minutes, a speed-up of more than 300 times, and we now have a system that can scale out to handle larger datasets in future.

The main advantage of this technique for us is that we can tweak the aggregation queries and fully reprocess the data quickly, which means we can make global changes to the search results without having to wait for data to feed through the old incremental process.

So, if you can cut through the general noise about Big Data, and instead focus on the problems you have right now, the combination of Hadoop and Pay-As-You-Go Cloud can get your data working much harder.
