7 steps to successful optimisation testing

04 Apr 2014

If your organisation is just getting started with optimisation testing and you are lucky enough to be part of that journey, you may feel overwhelmed. As with any venture, creating a plan and sticking to it helps you stay focused on the larger goal, even when individual steps prove challenging. A transparent process also creates accountability and benefits both the core testing team and the wider organisation.

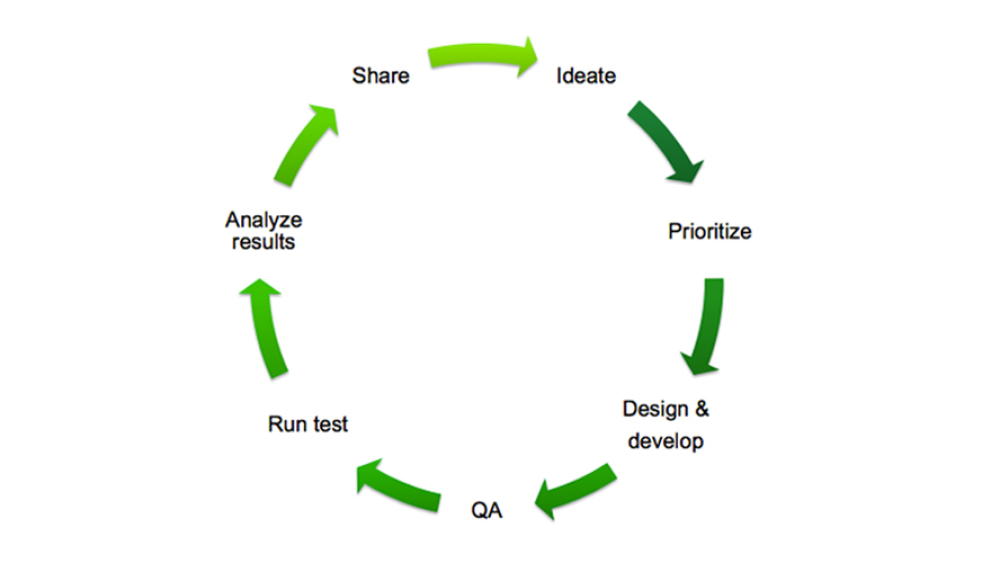

Here’s a seven-step process that most testing teams can adopt:

1. Ideation

This is the top-of-funnel stage in which ideas are gathered. Some will be obvious, others less so. If in doubt, these questions will help you identify promising areas in which to look for testing ideas:

- What is the main goal of the website?

- What are the key flows users go through to accomplish that goal?

- Where are the drop-offs occurring?

There is value in gathering a wide pool of ideas. At companies where testing is already part of the culture, ideas can be sourced from all sides of the organisation as well as from users and clients.
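To answer the question about drop-offs, it helps to compare how many users reach each step of a key flow. The sketch below computes the share of users lost between consecutive steps and lists the leakiest transitions first. The step names, the counts and the `drop_off_rates` helper are hypothetical; in practice the numbers would come from your analytics tool.

```python
# Hypothetical funnel: (step name, number of users reaching that step).
# The step names and counts are illustrative, not real data.
funnel = [
    ("Landing page", 10_000),
    ("Product page", 4_000),
    ("Basket", 1_200),
    ("Checkout", 600),
    ("Purchase", 450),
]


def drop_off_rates(steps):
    """Return (from_step, to_step, drop_off_fraction) for each consecutive pair."""
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        # Fraction of users who reached step A but did not reach step B.
        rates.append((name_a, name_b, 1 - count_b / count_a))
    return rates


if __name__ == "__main__":
    # Print transitions from the largest drop-off to the smallest.
    for a, b, rate in sorted(drop_off_rates(funnel), key=lambda r: r[2], reverse=True):
        print(f"{a} -> {b}: {rate:.0%} drop-off")
```

The transitions at the top of that list are natural places to start looking for test ideas.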

2. Prioritisation

Realistically, no team or organisation can test every single thing that comes to mind. After a pool of ideas has been gathered, those ideas have to be prioritised to determine what to test first.

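The Action Priority Matrix is very helpful in tackling prioritisation. It plots each idea by the effort required to run the test, including any necessary design and development resource, against the impact you expect it to have. This divides ideas into four quadrants: quick wins (high impact, low effort), major projects (high impact, high effort), fill-ins (low impact, low effort) and thankless tasks (low impact, high effort).

Once you’ve plotted all your ideas in the quadrants, it becomes obvious where to start.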
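The matrix can also be run as a small scoring exercise. This is a minimal sketch, assuming each idea is scored from 1 to 10 for expected impact and for effort, and that a score above 5 counts as "high"; the scale, the threshold, the `Idea` class and the example ideas are all hypothetical choices rather than part of the matrix itself.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int  # hypothetical score: expected impact, 1 (low) to 10 (high)
    effort: int  # hypothetical score: effort incl. design/development, 1 (low) to 10 (high)


def quadrant(idea, threshold=5):
    """Place an idea in an Action Priority Matrix quadrant (scores <= threshold count as low)."""
    high_impact = idea.impact > threshold
    high_effort = idea.effort > threshold
    if high_impact and not high_effort:
        return "Quick win"
    if high_impact and high_effort:
        return "Major project"
    if not high_impact and not high_effort:
        return "Fill-in"
    return "Thankless task"


# Order in which quadrants are tackled: quick wins first, thankless tasks last.
QUADRANT_ORDER = ["Quick win", "Major project", "Fill-in", "Thankless task"]


def prioritise(ideas):
    """Sort ideas by quadrant, then by impact-to-effort ratio within each quadrant."""
    return sorted(
        ideas,
        key=lambda i: (QUADRANT_ORDER.index(quadrant(i)), -i.impact / i.effort),
    )


if __name__ == "__main__":
    # Hypothetical backlog of test ideas.
    backlog = [
        Idea("Rebuild site search", impact=3, effort=9),
        Idea("Shorter registration form", impact=8, effort=3),
        Idea("New footer links", impact=2, effort=2),
        Idea("Redesign checkout", impact=9, effort=9),
    ]
    for idea in prioritise(backlog):
        print(f"{quadrant(idea)}: {idea.name}")
```

Within each quadrant the sketch breaks ties by impact-to-effort ratio, which is one reasonable convention among several.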

3. Design & development

This is the stage where the test comes to life. Some tests will require design or development resource, while others can be created independently. If you’ve plotted your ideas on the Action Priority Matrix, you should be able to distinguish the quick wins, which are easier to create, from the more resource-intensive major projects.

4. QA

Quality assurance is key when making any changes to a website. A non-technical tester can do QA if they have the right tools (virtual machines, devices, an understanding of browser-specific issues, etc.), but it is much safer to follow your established QA process to avoid unexpected trouble. Alert your QA team about tests you are preparing to run and get their thumbs up before making any changes to the site. They’ll thank you for it.

5. Testing

The test is finally live! Be patient. How long a test needs to run to reach statistically significant results depends on the sample size, the baseline conversion rate and the size of the difference between variations. Give your test enough time to reveal insights you can use, and strive for a confidence level of 95% or higher.

6. Results analysis

Analysing test results can be a very rewarding part of the testing process. When evaluating a test, keep a few things in mind:

- Statistical significance: look for a confidence level of 95% or higher

- Micro-conversions vs. macro-conversions: when testing to improve micro-conversions (e.g. clicking a button on a registration form, clicking a link in a promotional email), monitor the effect on macro-conversions, i.e. the site’s main goals or key metrics. Clicks and conversions are not always correlated, and failing to track through to conversion risks optimising against your key metrics

- Segmentation: if your users come from different markets or geographies, or have a variety of experiences on your site, it’s a good idea to segment test results to identify any key differences or patterns. This is especially relevant when you test something that may significantly alter the experience for existing users: segmenting for new vs. returning users can reveal valuable insights into site usage and user flows

- Failed tests: if a test fails, segmentation is your best friend. Take a hard look at the performance of key segments, identify any major differences and form a hypothesis as to why the test failed. A failed test will often reveal as much insight as a successful one and might inspire further drill-down exploration
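The significance and segmentation checks above can be combined in a few lines. This is a minimal sketch, assuming a pooled two-proportion z-test and reporting "confidence" as 1 minus the two-sided p-value, the way many testing tools present it; the `confidence_level` function and the segment figures are hypothetical.

```python
import math
from statistics import NormalDist


def confidence_level(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided confidence (1 - p-value) that B's conversion rate differs from A's."""
    p_a = conv_a / visitors_a
    p_b = conv_b / visitors_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return 1 - p_value


# Hypothetical results per segment: (conversions A, visitors A, conversions B, visitors B).
results = {
    "New users": (200, 4000, 260, 4000),
    "Returning users": (300, 3000, 290, 3000),
}

if __name__ == "__main__":
    for segment, (ca, va, cb, vb) in results.items():
        lift = (cb / vb) / (ca / va) - 1  # relative change in conversion rate, B vs. A
        conf = confidence_level(ca, va, cb, vb)
        verdict = "significant" if conf >= 0.95 else "not significant"
        print(f"{segment}: lift {lift:+.1%}, confidence {conf:.1%} ({verdict})")
```

Bear in mind that checking many segments raises the chance that one of them looks significant by luck alone, so a segment-level win is best treated as a hypothesis for a follow-up test.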

7. Documentation and sharing

Whether they succeed or fail, well-executed A/B tests carry insight worth keeping and sharing. Keep everyone in the loop:

- Communicate synthesised learnings to stakeholders to give the testing team credit for their work and to keep up the momentum of optimisation and development

- Create a repository of easy-to-read records of past tests and results that can be used to inform future work and to easily introduce new team members to the history of testing

- Spread the word across the wider organisation to continue generating excitement about optimisation and foster a culture of testing throughout the business
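A test repository does not need special tooling; a consistent record format is the important part. This is a minimal sketch, assuming records are kept as JSON; the `ExperimentRecord` fields, the helper functions and the example entry are hypothetical and should be adapted to what your team actually tracks.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ExperimentRecord:
    """One easy-to-read entry in a hypothetical repository of past tests."""
    name: str
    hypothesis: str
    start_date: str  # ISO 8601, e.g. "2014-03-01"
    end_date: str
    primary_metric: str  # the macro-conversion the test was judged on
    outcome: str  # e.g. "win", "loss" or "inconclusive"
    confidence: float  # confidence level at the end of the test, 0 to 1
    learnings: List[str] = field(default_factory=list)


def to_json(records):
    """Serialise records to human-readable JSON."""
    return json.dumps([asdict(r) for r in records], indent=2)


def from_json(text):
    """Load records back from JSON produced by to_json."""
    return [ExperimentRecord(**item) for item in json.loads(text)]


if __name__ == "__main__":
    # Hypothetical entry, for illustration only.
    record = ExperimentRecord(
        name="Shorter registration form",
        hypothesis="Removing optional fields will increase completed registrations",
        start_date="2014-03-01",
        end_date="2014-03-21",
        primary_metric="Completed registrations",
        outcome="inconclusive",
        confidence=0.81,
        learnings=["New users responded differently from returning users"],
    )
    print(to_json([record]))
```

Plain JSON keeps the records readable for new team members and easy to load into other tools later.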

By DMA guest blogger Savina Velkova, Audience Engineer, BrightTALK
